Highly Available Web Service Deployment with Ceph (Reef) RBD, Kubernetes and Nginx


1. Background

In containerized environments, integrating persistent storage with a container orchestrator such as Kubernetes is key to running stateful workloads with high availability and dynamic scalability. This article shows how to use Ceph RBD (RADOS Block Device) as persistent storage for Nginx running in Kubernetes, so that each web pod's data lives on replicated Ceph storage rather than on a single node's local disk.

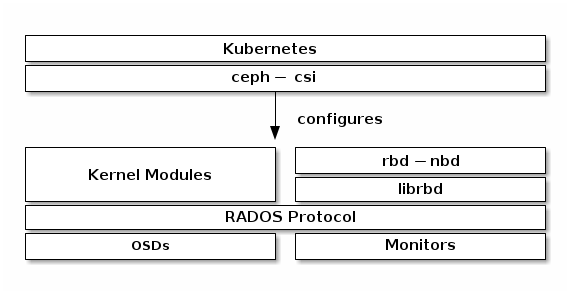

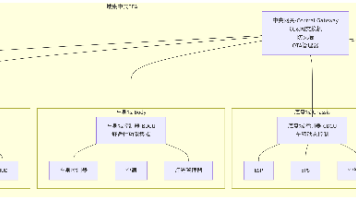

2. System Architecture Diagram

To use Ceph block devices with Kubernetes v1.13 or later, you must install and configure ceph-csi in the Kubernetes cluster. The architecture diagram depicts the resulting Kubernetes/Ceph technology stack.

3. Environment Preparation

3.1 Ceph Cluster

- The Ceph cluster is deployed and healthy, with RBD block storage available.

- ceph-csi is required for mounting RBD images in Kubernetes; it is installed on the Kubernetes side in Part 2 below.

3.2 Kubernetes Cluster

- The Kubernetes cluster is deployed, with a recommended version of 1.20+.

- CSI plugin support is enabled.

- ceph-common is installed on the nodes.

Lab environment:

| No. | Host Name | IP Address | Linux OS | Version | CPU/RAM | Software |
|---|---|---|---|---|---|---|
| #1 | ceph-admin-120 | 192.168.126.120 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #2 | ceph-mon-121 | 192.168.126.121 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #3 | ceph-mon-122 | 192.168.126.122 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #4 | ceph-mon-123 | 192.168.126.123 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #5 | ceph-osd-124 | 192.168.126.124 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #6 | ceph-osd-125 | 192.168.126.125 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #7 | ceph-osd-126 | 192.168.126.126 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #8 | ceph-mds-127 | 192.168.126.127 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #9 | ceph-mds-128 | 192.168.126.128 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #10 | ceph-mds-129 | 192.168.126.129 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #11 | ceph-rgw-130 | 192.168.126.130 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #12 | ceph-rgw-131 | 192.168.126.131 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #13 | ceph-rgw-132 | 192.168.126.132 | Ubuntu 22.04 LTS | 18.2.5 | 4 cores / 8 GB | Ceph |
| #14 | master | 192.168.126.100 | Ubuntu 22.04 LTS | 1.25 | 4 cores / 8 GB | Kubernetes |
| #15 | node1 | 192.168.126.101 | Ubuntu 22.04 LTS | 1.25 | 4 cores / 8 GB | Kubernetes |
| #16 | node2 | 192.168.126.102 | Ubuntu 22.04 LTS | 1.25 | 4 cores / 8 GB | Kubernetes |
| #17 | node3 | 192.168.126.103 | Ubuntu 22.04 LTS | 1.25 | 4 cores / 8 GB | Kubernetes |
| #18 | Vault Server | 192.168.126.99 | Ubuntu 22.04 LTS | 28 | 4 cores / 8 GB | Docker & HashiCorp Vault |

4. Deployment Process

Part 1: Ceph Preparation

1. Create a pool in the Ceph cluster.

By default, Ceph block devices use the `rbd` pool, so create a dedicated pool for Kubernetes volume storage. Make sure the Ceph cluster is running, then create the pool; the two `128` arguments are `pg_num` and `pgp_num`:

```
root@ceph-admin-120:~# ceph osd pool create kubernetes 128 128
```

Then initialize the pool for use by RBD:

```
root@ceph-admin-120:~# rbd pool init kubernetes
```
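As a rough sanity check on a placement-group count such as `128`, older Ceph documentation gave a rule of thumb: (number of OSDs × 100) / replica count, rounded up to a power of two. The result is a PG budget shared by all pools, not a per-pool figure. The sketch below computes that number.

- The function name `pg_rule_of_thumb` is hypothetical.
- On Reef the `pg_autoscaler` module normally manages `pg_num` itself, so treat the result only as a cross-check.

```bash
# Hypothetical helper: classic PG rule of thumb,
# (OSD count * 100) / replica count, rounded up to the next power of two.
pg_rule_of_thumb() {
  local osds=$1 size=$2 target pg=1
  # Ceiling division so a fractional result is not truncated downwards.
  target=$(( (osds * 100 + size - 1) / size ))
  # Double until we reach the smallest power of two >= target.
  while [ "$pg" -lt "$target" ]; do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}
```

For the six OSDs in this lab, and assuming the default replica count of 3, `pg_rule_of_thumb 6 3` prints 256 for the whole cluster.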

2. Set up Ceph client authentication

```
root@ceph-admin-120:~# ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes'
[client.kubernetes]
key = AQAZtRhosIoXJRAA8O13YWH+tvOz4Rz8dWDs3w==
```

Run the following command to check that the user's capabilities are set correctly:

```
root@ceph-admin-120:~# ceph auth get client.kubernetes
[client.kubernetes]
key = AQAZtRhosIoXJRAA8O13YWH+tvOz4Rz8dWDs3w==
caps mon = "profile rbd"
caps osd = "profile rbd pool=kubernetes"
```

Explanation:

- `ceph auth get-or-create` creates the `client.kubernetes` user, or returns its existing key. It grants `profile rbd` on the monitors (mon) and `profile rbd` restricted to the `kubernetes` pool on the object storage daemons (osd).

- `ceph auth get` prints the key and capabilities assigned to `client.kubernetes`, confirming the access rights above.
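The key and caps can also be checked from a script. `ceph auth get-key client.kubernetes` prints just the key. The sketch below instead reads the full `ceph auth get` output shown above, extracts the key, and verifies the two caps used in this setup.

- The helper names are hypothetical.
- The parsing assumes the `key = ...` and `caps ... = "..."` layout shown above.

```bash
# Hypothetical helpers that read `ceph auth get client.kubernetes` output on stdin.

# Print the base64 key: the third field of the "key = ..." line.
keyring_key() {
  awk '$1 == "key" && $2 == "=" { print $3 }'
}

# Succeed only if the mon caps are "profile rbd" and the osd caps are
# "profile rbd pool=<pool>" for the pool given as the first argument.
keyring_has_rbd_caps() {
  local pool=$1 text
  text=$(cat)
  printf '%s\n' "$text" | grep -qF 'caps mon = "profile rbd"' &&
    printf '%s\n' "$text" | grep -qF "caps osd = \"profile rbd pool=${pool}\""
}
```

For example, `ceph auth get client.kubernetes | keyring_has_rbd_caps kubernetes && echo ok`.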

3. Get the Ceph cluster information

```
root@ceph-admin-120:~# ceph mon dump
epoch 3
fsid 091a4f92-1cc4-11f0-9f6e-27ecaf2da6bd
last_changed 2025-04-19T02:32:34.168788+0000
created 2025-04-19T02:16:40.381125+0000
min_mon_release 18 (reef)
election_strategy: 1
0: [v2:192.168.126.121:3300/0,v1:192.168.126.121:6789/0] mon.ceph-mon-121
1: [v2:192.168.126.123:3300/0,v1:192.168.126.123:6789/0] mon.ceph-mon-123
2: [v2:192.168.126.122:3300/0,v1:192.168.126.122:6789/0] mon.ceph-mon-122
dumped monmap epoch 3
```
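The ceph-csi ConfigMap needs exactly two things from this output: the `fsid`, used as `clusterID`, and each monitor's v1 address on port 6789. The sketch below extracts both from the plain-text `ceph mon dump` output and prints them as compact JSON.

- It assumes the text layout shown above. `ceph mon dump -f json` would be a sturdier input if a JSON tool is available.
- The function name is hypothetical.

```bash
# Hypothetical helper: read `ceph mon dump` text on stdin and print a
# ceph-csi config.json body (clusterID = fsid, monitors = v1 addresses).
mon_dump_to_csi_json() {
  local dump fsid mons
  dump=$(cat)
  fsid=$(printf '%s\n' "$dump" | awk '$1 == "fsid" { print $2 }')
  # Pull "v1:IP:PORT" out of each "[v2:...,v1:...]" address vector, drop the
  # "v1:" tag, wrap each address in double quotes, and join with commas.
  mons=$(printf '%s\n' "$dump" |
    grep -o 'v1:[0-9.]*:[0-9]*' |
    sed -e 's/^v1://' -e 's/.*/"&"/' |
    paste -s -d, -)
  printf '[{"clusterID":"%s","monitors":[%s]}]\n' "$fsid" "$mons"
}
```

Usage on the admin node: `ceph mon dump | mon_dump_to_csi_json`. The monitors come out in monmap order, which does not matter to ceph-csi.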

Part 2: Kubernetes & Vault Preparation

1. Deploy the Ceph CSI driver in the Kubernetes cluster

Kubernetes provisions and mounts Ceph RBD volumes through the ceph-csi driver (CSI: Container Storage Interface). A StorageClass selects this driver through its provisioner name, `rbd.csi.ceph.com`. Start by creating a namespace for it:

```
root@master:~/ceph# kubectl create namespace ceph
```

This creates a dedicated namespace named `ceph` for managing the Ceph-related resources in Kubernetes.

2. Prepare the ConfigMap resource for Ceph CSI

2.1 ConfigMap for the Ceph cluster

```
root@master:~/ceph# cat csi-configmap.yaml
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "091a4f92-1cc4-11f0-9f6e-27ecaf2da6bd",
        "monitors": [
          "192.168.126.121:6789",
          "192.168.126.122:6789",
          "192.168.126.123:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config
  namespace: ceph
root@master:~/ceph# kubectl apply -f csi-configmap.yaml
```

This step involves creating a ConfigMap that contains configuration details about the Ceph cluster, such as monitor endpoints and cluster ID (fsid). The Ceph CSI driver uses this configuration to locate and interact with the cluster.

2.2 Define the ConfigMap resource for the KMS (Key Management Service)

Step 1: Run the Vault container (in development mode)

```
root@vault server:~# docker run --cap-add=IPC_LOCK -e 'VAULT_DEV_ROOT_TOKEN_ID=myroot' \
  -e 'VAULT_DEV_LISTEN_ADDRESS=0.0.0.0:8200' \
  -p 8200:8200 --name vault-dev -d hashicorp/vault:1.15.5
```

This command starts Vault in development mode, listening on port 8200 on all interfaces and published on port 8200 of the host. It sets the root token to `myroot`, the token used in the following steps. Development mode keeps all data in memory and is not secure; use it only for testing.

Step 2: Configure Vault for use with Ceph CSI

The setup below uses the Vault CLI inside the container; Vault's HTTP API could be used instead.

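```
root@vault server:~# docker exec -it vault-dev sh
/ # export VAULT_ADDR=http://127.0.0.1:8200
/ # export VAULT_TOKEN=myroot
/ # vault secrets enable -path=kv kv
Success! Enabled the kv secrets engine at: kv/
/ # vault auth enable kubernetes
Success! Enabled kubernetes auth method at: kubernetes/
/ # vault policy write ceph-csi-kms-policy - <<EOF
> path "kv/data/csi/*" {
>   capabilities = ["create", "read", "update", "delete", "list"]
> }
> EOF
Success! Uploaded policy: ceph-csi-kms-policy
/ # exit
```

Note that `vault secrets enable -path=kv kv` mounts version 1 of the KV secrets engine, while the `kv/data/...` path in the policy is the KV version 2 layout. For the policy to match, either enable the engine as KV v2 (`vault secrets enable -path=kv -version=2 kv`) or write the policy against `kv/csi/*` if KV v1 is intended.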
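On a KV version 2 mount, Vault serves secret payloads under `<mount>/data/` but key listings and metadata under `<mount>/metadata/`. A `list` capability granted only on `kv/data/csi/*` therefore has no effect. The sketch below prints a policy that covers both paths.

- It assumes a KV v2 mount, and that keys under `csi/` need to be listed and deleted.
- The helper name is hypothetical.

```bash
# Hypothetical helper: print a Vault policy for keys under <prefix> in a KV v2 mount.
# KV v2 keeps secret payloads under <mount>/data/ and listings/metadata under <mount>/metadata/.
kv2_policy() {
  local mount=$1 prefix=$2
  cat <<EOF
path "${mount}/data/${prefix}*" {
  capabilities = ["create", "read", "update", "delete"]
}
path "${mount}/metadata/${prefix}*" {
  capabilities = ["read", "list", "delete"]
}
EOF
}
```

Inside the container, `kv2_policy kv csi/ | vault policy write ceph-csi-kms-policy -` would replace the policy written above.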

Step 3: Define the Key Management Service (KMS)

Recent versions of ceph-csi require an additional ConfigMap that describes the KMS (Key Management Service) provider, for example HashiCorp Vault, which ceph-csi uses for volume encryption. The StorageClass created in Part 3 does not enable encryption, so this configuration is not exercised in this walkthrough. More complete examples are available in the official ceph-csi GitHub repository:

https://github.com/ceph/ceph-csi/tree/master/examples/kms

```
root@master:~/ceph# cat csi-kms-config-map.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ceph-csi-encryption-kms-config
  namespace: ceph
data:
  config.json: |-
    {
      "encryptionKMSType": "vault",
      "vaultAddress": "http://192.168.126.99:8200",
      "vaultBackendPath": "kv",
      "vaultSecretPrefix": "csi/",
      "vaultToken": "myroot"
    }
root@master:~/ceph# kubectl apply -f csi-kms-config-map.yaml
```
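When no KMS is used, as here where no StorageClass requests encryption, the Ceph documentation's minimal form of this ConfigMap is an empty JSON object. That form would also satisfy the plugin manifests, which reference the ConfigMap by name. The sketch below writes it to a file; the function name and its default arguments are assumptions.

```bash
# Hypothetical helper: write the minimal KMS ConfigMap (an empty JSON object = no KMS configured).
# Arguments: output file (default csi-kms-config-map.yaml) and namespace (default ceph).
write_empty_kms_config() {
  local out=${1:-csi-kms-config-map.yaml} ns=${2:-ceph}
  cat > "$out" <<EOF
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    {}
metadata:
  name: ceph-csi-encryption-kms-config
  namespace: ${ns}
EOF
}
```

Usage: `write_empty_kms_config csi-kms-config-map.yaml ceph && kubectl apply -f csi-kms-config-map.yaml`.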

2.3 Ceph Configuration ConfigMap Resource

The `ceph-config` ConfigMap supplies the `ceph.conf` file used inside the ceph-csi containers; here it only states that cephx authentication is required. The `keyring` key must exist but stay empty, because the credentials are supplied through the Kubernetes Secret created in Part 3.

Here is the content of ceph-config-map.yaml:

```
root@master:~/ceph# cat ceph-config-map.yaml
apiVersion: v1
kind: ConfigMap
data:
  ceph.conf: |
    [global]
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
  # keyring is a required key and its value should be empty
  keyring: |
metadata:
  name: ceph-config
  namespace: ceph
root@master:~/ceph# kubectl apply -f ceph-config-map.yaml
```

3. Authorize Ceph CSI to access the K8S cluster using RBAC permissions

Ceph CSI will deploy two components in the Kubernetes cluster: csi-provisioner and csi-nodeplugin. Both components need to be granted appropriate RBAC (Role-Based Access Control) permissions to interact with the Kubernetes API.

3.1 Download the RBAC resource manifest for the CSI client

Ceph CSI provides predefined RBAC manifests for csi-provisioner and csi-nodeplugin in its official repository. Download them with:

```
root@master:~/ceph# wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-provisioner-rbac.yaml
root@master:~/ceph# wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-nodeplugin-rbac.yaml
```

3.2 Create RBAC resources in the K8S cluster

The downloaded manifests use the `default` namespace. Before applying them, replace `default` with `ceph` so that the RBAC objects match the namespace where csi-provisioner and csi-nodeplugin are deployed:

```
root@master:~/ceph# kubectl apply -f csi-provisioner-rbac.yaml
root@master:~/ceph# kubectl apply -f csi-nodeplugin-rbac.yaml
```
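One way to do the namespace replacement is a `sed` substitution over the downloaded files. The sketch below does this and keeps a `.bak` copy of each file.

- It assumes the files refer to the namespace literally as `namespace: default`. Reviewing the result with `grep -n namespace *.yaml` before applying is a good idea.
- The helper name is hypothetical.

```bash
# Hypothetical helper: rewrite "namespace: default" (at end of line) to the given
# namespace in each file; -i.bak keeps a backup and works with GNU and BSD sed.
set_manifest_namespace() {
  local ns=$1
  shift
  local f
  for f in "$@"; do
    sed -i.bak "s/namespace: default\$/namespace: ${ns}/" "$f"
  done
}
```

Usage before the two `kubectl apply` commands: `set_manifest_namespace ceph csi-provisioner-rbac.yaml csi-nodeplugin-rbac.yaml`. The same call works for the plugin manifests in the next step.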

4. Deploy the Ceph CSI Plugin in the K8S Cluster

As with the RBAC manifests, replace the `default` namespace with `ceph` in the plugin manifests before applying them:

```
root@master:~/ceph# wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin-provisioner.yaml
root@master:~/ceph# wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin.yaml
root@master:~/ceph# kubectl apply -f csi-rbdplugin-provisioner.yaml
root@master:~/ceph# kubectl apply -f csi-rbdplugin.yaml
```
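After applying the manifests, the csi-rbdplugin and csi-rbdplugin-provisioner pods should all reach `Running` with every container ready. `kubectl -n ceph wait --for=condition=Ready pod --all --timeout=300s` waits for that directly. The sketch below instead checks a saved `kubectl get pod` listing, which can be handy in scripts or logs.

- It assumes the default column layout (NAME, READY, STATUS, ...).
- The function name is hypothetical.

```bash
# Hypothetical check over `kubectl get pod` output on stdin: every pod must be
# Running with READY shown as n/n. Prints offending pods; exit status 1 if any.
all_pods_ready() {
  awk 'NR > 1 {
         split($2, r, "/")
         if ($3 != "Running" || r[1] != r[2]) { bad = 1; print "not ready: " $1 }
       }
       END { exit bad }'
}
```

Usage: `kubectl -n ceph get pod | all_pods_ready`.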

Part 3: RBD Block Storage Preparation

Install the Ceph client tools on every Kubernetes node. The ceph-common package provides the `ceph` and `rbd` commands, which are used later in this article, for example to inspect RBD images from a node.

1. Install Ceph Commands on Each Kubernetes Node

```
root@node1:~# apt update && apt install ceph-common -y
root@node2:~# apt update && apt install ceph-common -y
root@node3:~# apt update && apt install ceph-common -y
```

2. Store the User Information for Accessing RBD Block Storage in a Secret Resource

To securely store the Ceph RBD access credentials, you should create a Secret resource in Kubernetes. This will store the Ceph client (userID) and its authentication key (userKey), allowing the Kubernetes cluster to authenticate and access the RBD block devices through Ceph.

```
root@master:~/ceph# cat csi-rbd-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: ceph
stringData:
  userID: kubernetes
  userKey: AQAZtRhosIoXJRAA8O13YWH+tvOz4Rz8dWDs3w==
root@master:~/ceph# kubectl apply -f csi-rbd-secret.yaml
```
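Rather than pasting the key by hand, the Secret can be generated from the output of `ceph auth get-key client.kubernetes`. Note that `userID` is the Ceph user name without the `client.` prefix. The sketch below is one way to do it; the function name is hypothetical. `kubectl create secret generic csi-rbd-secret --from-literal=... --dry-run=client -o yaml` is an equivalent built-in route.

```bash
# Hypothetical generator for csi-rbd-secret.yaml.
# Arguments: Ceph user name without "client." prefix, raw key, namespace (default ceph).
write_rbd_secret() {
  local user=$1 key=$2 ns=${3:-ceph}
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: ${ns}
stringData:
  userID: ${user}
  userKey: ${key}
EOF
}
```

Usage (the key must be fetched on a host with Ceph admin access): `write_rbd_secret kubernetes "$(ceph auth get-key client.kubernetes)" > csi-rbd-secret.yaml`.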

3. Create a StorageClass

In Kubernetes, a StorageClass resource defines how storage is provisioned dynamically, and it can be configured to interact with external storage systems, such as Ceph RBD (Ceph block devices).

To create a StorageClass that will manage the provisioning of Ceph RBD-backed volumes, we will define the necessary parameters that tell Kubernetes how to communicate with the Ceph cluster and provision volumes.

```
root@master:~/ceph# cat rbd-storageclass.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rbd-storage  # The name of the StorageClass resource.
provisioner: rbd.csi.ceph.com  # The CSI driver used for provisioning (Ceph RBD).
parameters:
  clusterID: 091a4f92-1cc4-11f0-9f6e-27ecaf2da6bd  # The Ceph cluster fsid, as shown by `ceph mon dump`.
  pool: kubernetes  # The Ceph pool in which the RBD images are created.
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret  # Secret holding the Ceph credentials used for provisioning.
  csi.storage.k8s.io/provisioner-secret-namespace: ceph  # Namespace of that Secret.
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret  # Secret the node plugin uses when mapping and mounting the volume.
  csi.storage.k8s.io/node-stage-secret-namespace: ceph  # Namespace of the node-stage Secret.
  imageFormat: "2"  # RBD image format 2, required for features such as layering.
  imageFeatures: "layering"  # Enables layering, which RBD cloning relies on.
reclaimPolicy: Delete  # Deleting the PVC also deletes the backing RBD image.
mountOptions:
  - discard  # Lets the filesystem issue discards so freed space is returned to Ceph.
root@master:~/ceph# kubectl apply -f rbd-storageclass.yaml
```

4. Dynamic Volume Provisioning

Below is the YAML configuration for creating a PVC that will dynamically provision a PV using the rbd-storage StorageClass.

```
root@master:~/ceph# cat rbd-sc-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-sc-pvc  # Name of the PVC.
  namespace: ceph  # Namespace in which the PVC resides.
spec:
  accessModes:
    - ReadWriteOnce  # A filesystem-mode RBD volume can be mounted read-write by only one node.
  volumeMode: Filesystem  # Present the volume as a formatted filesystem.
  resources:
    requests:
      storage: 10Gi  # Request 10Gi of storage.
  storageClassName: rbd-storage  # StorageClass that uses Ceph RBD as the volume backend.
root@master:~/ceph# kubectl apply -f rbd-sc-pvc.yaml
```
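Provisioning is asynchronous: the PVC moves to `Bound` once ceph-csi has created the RBD image. Newer kubectl releases can wait for that with `kubectl -n ceph wait --for=jsonpath='{.status.phase}'=Bound pvc/rbd-sc-pvc`. The sketch below performs the same check over a saved `kubectl get pvc` listing and prints any claim that is not yet bound.

- It assumes the default column layout, with STATUS as the second column.
- The function name is hypothetical.

```bash
# Hypothetical check over `kubectl get pvc` output on stdin: print every claim
# whose STATUS is not Bound; exit status 1 if there is at least one.
pvcs_not_bound() {
  awk 'NR > 1 && $2 != "Bound" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: `kubectl -n ceph get pvc | pvcs_not_bound`.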

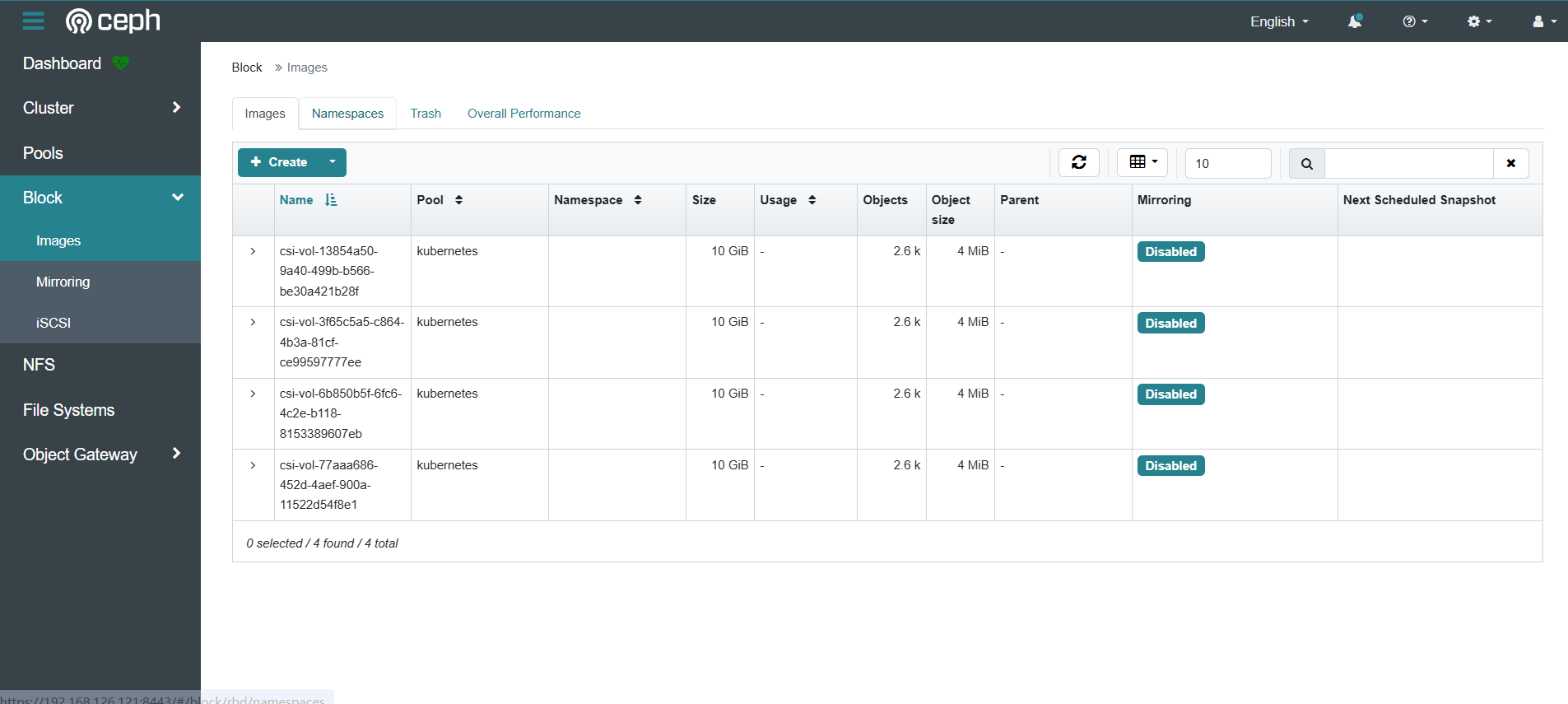

5. Check the Block Storage in the Ceph Resource Pool

To confirm that the corresponding block device image has been created in the Ceph pool, list and inspect the RBD (RADOS Block Device) images. `rbd -p` and `rbd info` need a `ceph.conf` and a suitable keyring on the node where they run.

```
root@node2:~# rbd showmapped
root@node2:~# rbd -p kubernetes ls
root@node2:~# rbd info kubernetes/csi-vol-4b8e7a87-d431-42ea-ab24-5ae177eab485
```

Part 4: Deploying Nginx Service

1. Deploying Nginx Pods with PVC Storage Volumes using StatefulSet Controller

```
root@master:~/ceph# cat rbd-nginx-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nginx-statefulset
  namespace: ceph
spec:
  serviceName: "rbd-nginx"
  replicas: 3
  selector:
    matchLabels:
      app: rbd-nginx
  template:
    metadata:
      labels:
        app: rbd-nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.20
          ports:
            - name: web
              containerPort: 80
              protocol: TCP
          volumeMounts:
            - name: web-data
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: web-data
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: "rbd-storage"
        resources:
          requests:
            storage: 10Gi
root@master:~/ceph# kubectl apply -f rbd-nginx-statefulset.yaml
```
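The article lists a `nginx_service.yaml` in its working directory but never shows it. The `kubectl get svc` output includes a NodePort Service, `rbd-nginx-nodeport`, mapping port 80 to node port 30060. Separately, `serviceName: "rbd-nginx"` above names a governing headless Service that Kubernetes expects to exist to give the pods stable DNS names; no such Service appears in that output.

The sketch below is a hypothetical reconstruction of both Services. The names, the `app: rbd-nginx` selector and the ports are inferred from the StatefulSet and the `get svc` output, not taken from the original file.

```bash
# Hypothetical reconstruction of nginx_service.yaml: a headless governing Service
# for the StatefulSet plus a NodePort Service on port 30060.
write_nginx_services() {
  local ns=${1:-ceph}
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: rbd-nginx
  namespace: ${ns}
spec:
  clusterIP: None
  selector:
    app: rbd-nginx
  ports:
    - name: web
      port: 80
---
apiVersion: v1
kind: Service
metadata:
  name: rbd-nginx-nodeport
  namespace: ${ns}
spec:
  type: NodePort
  selector:
    app: rbd-nginx
  ports:
    - name: web
      port: 80
      targetPort: 80
      nodePort: 30060
EOF
}
```

Usage: `write_nginx_services ceph | kubectl apply -f -`, then `curl http://192.168.126.101:30060/`. Each replica mounts its own RWO volume, so a request through the NodePort may reach a pod whose volume does not hold the page written to nginx-statefulset-0. Identical content on every replica would require writing it to each volume, or a ReadWriteMany backend such as CephFS.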

2. Verifying Ceph RBD PVC Persistence with Nginx StatefulSet

To verify that the PVC is mounted, write a test page to /usr/share/nginx/html/index.html in one replica and fetch it with curl:

```
root@master:~/ceph# kubectl -n ceph get pod
NAME READY STATUS RESTARTS AGE
csi-rbdplugin-ktbfq 3/3 Running 0 113m
csi-rbdplugin-provisioner-6d767d5fb9-7xd4t 7/7 Running 0 113m
csi-rbdplugin-provisioner-6d767d5fb9-vz9xc 7/7 Running 0 113m
csi-rbdplugin-provisioner-6d767d5fb9-w79fq 7/7 Running 0 113m
csi-rbdplugin-rm6j9 3/3 Running 0 113m
csi-rbdplugin-sw8wq 3/3 Running 0 113m
nginx-statefulset-0 1/1 Running 0 84m
nginx-statefulset-1 1/1 Running 0 84m
nginx-statefulset-2 1/1 Running 0 84m
root@master:~/ceph# kubectl -n ceph exec -it nginx-statefulset-0 -- bash
root@nginx-statefulset-0:/# cd /usr/share/nginx/html/
root@nginx-statefulset-0:/usr/share/nginx/html# ls
index.html lost+found
root@nginx-statefulset-0:/usr/share/nginx/html# echo "Hello nginx, this is a test sample for ceph storage." > index.html
root@nginx-statefulset-0:/usr/share/nginx/html# curl localhost
Hello nginx, this is a test sample for ceph storage.
```
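The session above shows that the volume is mounted and writable, but not yet that the data persists. A stronger check deletes the pod, lets the StatefulSet recreate it (reattaching the same PVC, web-data-nginx-statefulset-0), and reads the file again. The sketch below does this.

- It assumes `kubectl` access to the `ceph` namespace.
- The function name and the retry limits are hypothetical.

```bash
# Hypothetical persistence check: delete a StatefulSet pod, wait for its
# replacement to become Ready, then compare the file contents with the expected text.
verify_persistence() {
  local ns=$1 pod=$2 file=$3 expected=$4 actual i
  kubectl -n "$ns" delete pod "$pod" >/dev/null || return 1
  # The replacement pod may not exist yet right after the delete returns,
  # and `kubectl wait` fails at once for a missing pod, so retry for ~6 minutes.
  for i in $(seq 1 30); do
    if kubectl -n "$ns" wait --for=condition=Ready "pod/$pod" --timeout=10s >/dev/null 2>&1; then
      break
    fi
    sleep 2
  done
  actual=$(kubectl -n "$ns" exec "$pod" -- cat "$file") || return 1
  [ "$actual" = "$expected" ]
}
```

Usage:

`verify_persistence ceph nginx-statefulset-0 /usr/share/nginx/html/index.html "Hello nginx, this is a test sample for ceph storage." && echo persisted`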

On the Ceph side, the `kubernetes` pool now holds one RBD image per PVC: one for rbd-sc-pvc and one for each StatefulSet replica.

```
root@ceph-mon-121:~# rbd -p kubernetes ls
csi-vol-13854a50-9a40-499b-b566-be30a421b28f
csi-vol-3f65c5a5-c864-4b3a-81cf-ce99597777ee
csi-vol-6b850b5f-6fc6-4c2e-b118-8153389607eb
csi-vol-77aaa686-452d-4aef-900a-11522d54f8e1
root@ceph-mon-121:~# rbd info kubernetes/csi-vol-3f65c5a5-c864-4b3a-81cf-ce99597777ee
rbd image 'csi-vol-3f65c5a5-c864-4b3a-81cf-ce99597777ee':
size 10 GiB in 2560 objects
order 22 (4 MiB objects)
snapshot_count: 0
id: 3bb6a34bfef68
block_name_prefix: rbd_data.3bb6a34bfef68
format: 2
features: layering
op_features:
flags:
create_timestamp: Tue May 6 13:36:43 2025
access_timestamp: Tue May 6 13:36:43 2025
modify_timestamp: Tue May 6 13:36:43 2025
root@ceph-mon-121:~# ceph osd map postgresql rbd_data.3bb6a34bfef68
osdmap e5391 pool 'postgresql' (37) object 'rbd_data.3bb6a34bfef68' -> pg 37.59042690 (37.10) -> up ([5,2,0], p5) acting ([5,2,0], p5)
```

Note that this `ceph osd map` call names the `postgresql` pool rather than `kubernetes`. It also computes a placement for whatever object name it is given, whether or not that object exists. To locate this image's data, query the `kubernetes` pool with the name of one of the image's data objects, such as `rbd_data.3bb6a34bfef68.0000000000000000` (the first 4 MiB object).
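For a format 2 image, each data object is named after the image's `block_name_prefix`, followed by a dot and the object number as 16 lowercase hexadecimal digits. The object number for a byte offset is the offset divided by the object size, 2^order bytes (4 MiB for order 22 above). The sketch below builds such a name so it can be passed to `ceph osd map`; the function name is hypothetical.

```bash
# Hypothetical helper: name of the RBD data object holding a given byte offset.
# Arguments: block_name_prefix, order (object size = 2^order bytes), byte offset.
rbd_data_object() {
  local prefix=$1 order=$2 offset=$3
  local objno=$(( offset >> order ))  # same as offset / 2^order
  printf '%s.%016x\n' "$prefix" "$objno"
}
```

Usage: `ceph osd map kubernetes "$(rbd_data_object rbd_data.3bb6a34bfef68 22 0)"`. Offsets up to the last byte of this 10 GiB image map to object numbers 0 through 2559 (`...09ff`), matching the 2560 objects reported by `rbd info`.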

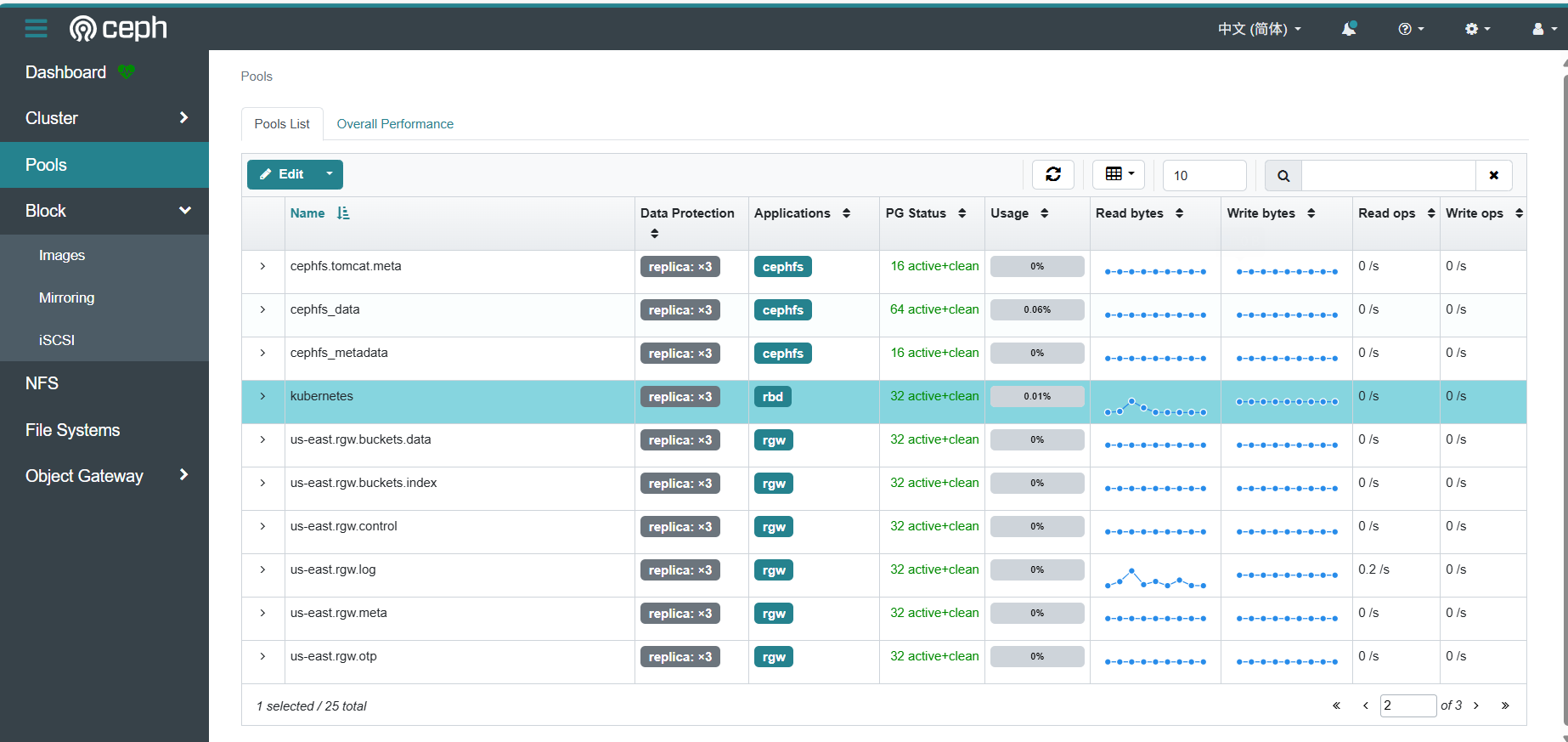

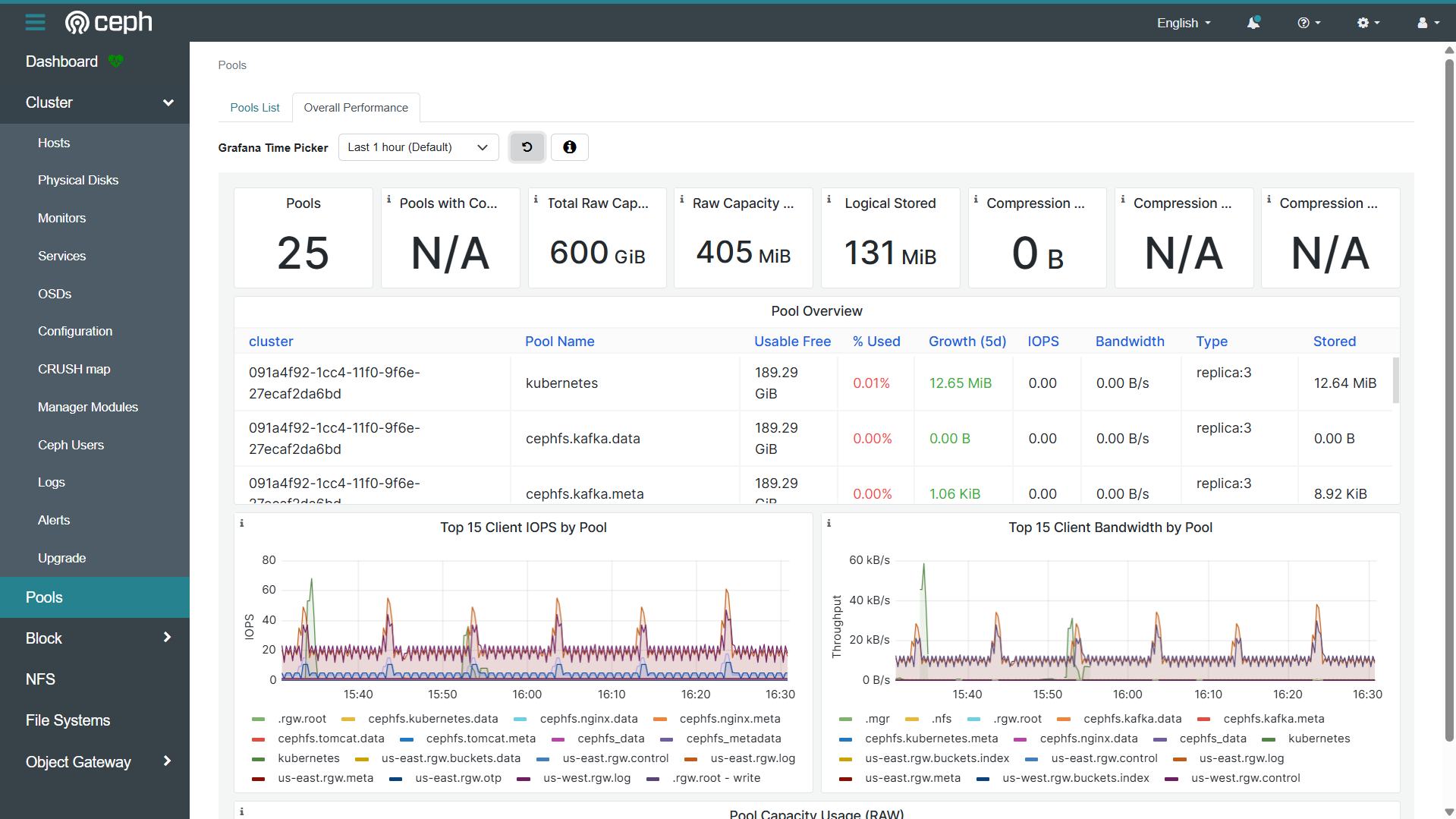

3. Access Ceph Dashboard

- https://192.168.126.121:8443/#/pool

4. Check Ceph Cluster Health

```
root@ceph-admin-120:~# ceph -s
  cluster:
    id: 091a4f92-1cc4-11f0-9f6e-27ecaf2da6bd
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph-mon-121,ceph-mon-123,ceph-mon-122 (age 3h)
    mgr: ceph-mon-121.lecujw(active, since 3h), standbys: ceph-mon-122.fqawgp
    mds: 5/5 daemons up, 6 standby
    osd: 6 osds: 6 up (since 3h), 6 in (since 2w)
    rgw: 3 daemons active (3 hosts, 2 zones)

  data:
    volumes: 5/5 healthy
    pools: 25 pools, 785 pgs
    objects: 1.59k objects, 164 MiB
    usage: 1.7 GiB used, 598 GiB / 600 GiB avail
    pgs: 785 active+clean

  io:
    client: 11 KiB/s rd, 21 op/s rd, 0 op/s wr
```

```
root@ceph-admin-120:~# ceph orch host ls
HOST ADDR LABELS STATUS
ceph-mds-127 192.168.126.127 mds
ceph-mds-128 192.168.126.128 mds
ceph-mds-129 192.168.126.129 mds
ceph-mon-121 192.168.126.121 _admin,mon
ceph-mon-122 192.168.126.122 mon
ceph-mon-123 192.168.126.123 mon
ceph-nfs-133 192.168.126.133 nfs
ceph-nfs-134 192.168.126.134 nfs
ceph-nfs-135 192.168.126.135 nfs
ceph-osd-124 192.168.126.124 osd
ceph-osd-125 192.168.126.125 osd
ceph-osd-126 192.168.126.126 osd
ceph-rgw-130 192.168.126.130 rgw
ceph-rgw-131 192.168.126.131 rgw
ceph-rgw-132 192.168.126.132 rgw
15 hosts in cluster
```

```
root@ceph-admin-120:~# ceph orch device ls
HOST PATH TYPE DEVICE ID SIZE AVAILABLE REFRESHED REJECT REASONS
ceph-mds-127 /dev/sdb hdd 100G Yes 7m ago
ceph-mds-127 /dev/sdc hdd 100G Yes 7m ago
ceph-mds-128 /dev/sdb hdd 100G Yes 7m ago
ceph-mds-128 /dev/sdc hdd 100G Yes 7m ago
ceph-mds-129 /dev/sdb hdd 100G Yes 7m ago
ceph-mds-129 /dev/sdc hdd 100G Yes 7m ago
ceph-mon-121 /dev/sdb hdd 100G Yes 7m ago
ceph-mon-122 /dev/sdb hdd 100G Yes 7m ago
ceph-mon-123 /dev/sdb hdd 100G Yes 7m ago
ceph-nfs-133 /dev/sdb hdd 100G Yes 7m ago
ceph-nfs-133 /dev/sdc hdd 100G Yes 7m ago
ceph-nfs-134 /dev/sdb hdd 100G Yes 7m ago
ceph-nfs-134 /dev/sdc hdd 100G Yes 7m ago
ceph-nfs-135 /dev/sdb hdd 100G Yes 7m ago
ceph-nfs-135 /dev/sdc hdd 100G Yes 7m ago
ceph-osd-124 /dev/sdb hdd 100G Yes 7m ago
ceph-osd-124 /dev/sdc hdd 100G Yes 7m ago
ceph-osd-125 /dev/sdb hdd 100G Yes 7m ago
ceph-osd-125 /dev/sdc hdd 100G Yes 7m ago
ceph-osd-126 /dev/sdb hdd 100G Yes 7m ago
ceph-osd-126 /dev/sdc hdd 100G Yes 7m ago
ceph-rgw-130 /dev/sdb hdd 100G Yes 7m ago
ceph-rgw-130 /dev/sdc hdd 100G Yes 7m ago
ceph-rgw-131 /dev/sdb hdd 100G Yes 7m ago
ceph-rgw-131 /dev/sdc hdd 100G Yes 7m ago
ceph-rgw-132 /dev/sdb hdd 100G Yes 7m ago
ceph-rgw-132 /dev/sdc hdd 100G Yes 7m ago
```

```
root@ceph-admin-120:~# ceph orch ps
NAME HOST PORTS STATUS REFRESHED AGE MEM USE MEM LIM VERSION IMAGE ID CONTAINER ID
alertmanager.ceph-mon-121 ceph-mon-121 *:9093,9094 running (3h) 19s ago 2w 31.9M - 0.25.0 c8568f914cd2 3c9e20a01283
ceph-exporter.ceph-mds-127 ceph-mds-127 running (3h) 2m ago 2w 24.2M - 18.2.6 d4baa6a728fa 9c82dc9033cc
ceph-exporter.ceph-mds-128 ceph-mds-128 running (3h) 2m ago 2w 17.7M - 18.2.6 d4baa6a728fa 34d9e39b348a
ceph-exporter.ceph-mds-129 ceph-mds-129 running (3h) 20s ago 2w 23.2M - 18.2.6 d4baa6a728fa 0f418595f029
ceph-exporter.ceph-mon-121 ceph-mon-121 running (3h) 19s ago 2w 10.7M - 18.2.6 d4baa6a728fa 515da7ba28db
ceph-exporter.ceph-mon-122 ceph-mon-122 running (3h) 2m ago 2w 15.5M - 18.2.6 d4baa6a728fa d78521f84b8d
ceph-exporter.ceph-mon-123 ceph-mon-123 running (3h) 2m ago 2w 16.5M - 18.2.6 d4baa6a728fa 3a31bfac4f18
ceph-exporter.ceph-nfs-133 ceph-nfs-133 running (3h) 8m ago 10d 13.6M - 18.2.6 d4baa6a728fa 86aee26c66f1
ceph-exporter.ceph-nfs-134 ceph-nfs-134 running (3h) 8m ago 9d 20.1M - 18.2.6 d4baa6a728fa 2079e73b2e3c
ceph-exporter.ceph-nfs-135 ceph-nfs-135 running (3h) 8m ago 9d 22.8M - 18.2.6 d4baa6a728fa 21bf8a26a3f7
ceph-exporter.ceph-osd-124 ceph-osd-124 running (3h) 2m ago 2w 30.5M - 18.2.6 d4baa6a728fa cb07e5d8bd79
ceph-exporter.ceph-osd-125 ceph-osd-125 running (3h) 2m ago 2w 15.7M - 18.2.6 d4baa6a728fa 7246640afe70
ceph-exporter.ceph-osd-126 ceph-osd-126 running (3h) 2m ago 2w 30.1M - 18.2.6 d4baa6a728fa dc9c0f28daa2
ceph-exporter.ceph-rgw-130 ceph-rgw-130 running (3h) 18s ago 2w 18.6M - 18.2.6 d4baa6a728fa 8bad037a204c
ceph-exporter.ceph-rgw-131 ceph-rgw-131 running (3h) 18s ago 2w 26.7M - 18.2.6 d4baa6a728fa ca89b2ad1a4e
ceph-exporter.ceph-rgw-132 ceph-rgw-132 running (3h) 18s ago 12d 22.7M - 18.2.6 d4baa6a728fa d4bd637d9c88
crash.ceph-mds-127 ceph-mds-127 running (3h) 2m ago 2w 21.8M - 18.2.6 d4baa6a728fa ffba36321f59
crash.ceph-mds-128 ceph-mds-128 running (3h) 2m ago 2w 24.6M - 18.2.6 d4baa6a728fa cf7b81b0d803
crash.ceph-mds-129 ceph-mds-129 running (3h) 20s ago 2w 24.7M - 18.2.6 d4baa6a728fa 6ad4b8a1c840
crash.ceph-mon-121 ceph-mon-121 running (3h) 19s ago 2w 19.2M - 18.2.6 d4baa6a728fa 3fb8c69d8317
crash.ceph-mon-122 ceph-mon-122 running (3h) 2m ago 2w 22.0M - 18.2.6 d4baa6a728fa 569a8b1c4903
crash.ceph-mon-123 ceph-mon-123 running (3h) 2m ago 2w 18.0M - 18.2.6 d4baa6a728fa 2e300a890fe6
crash.ceph-nfs-133 ceph-nfs-133 running (3h) 8m ago 10d 22.8M - 18.2.6 d4baa6a728fa a8571aeeb30a
crash.ceph-nfs-134 ceph-nfs-134 running (3h) 8m ago 9d 26.8M - 18.2.6 d4baa6a728fa 79c1466ac34f
crash.ceph-nfs-135 ceph-nfs-135 running (3h) 8m ago 9d 24.2M - 18.2.6 d4baa6a728fa a4255e5761ab
crash.ceph-osd-124 ceph-osd-124 running (3h) 2m ago 2w 17.5M - 18.2.6 d4baa6a728fa 875423c6ba8d
crash.ceph-osd-125 ceph-osd-125 running (3h) 2m ago 2w 21.2M - 18.2.6 d4baa6a728fa adeba0bbe097
crash.ceph-osd-126 ceph-osd-126 running (3h) 2m ago 2w 25.2M - 18.2.6 d4baa6a728fa 4515a0e491f1
crash.ceph-rgw-130 ceph-rgw-130 running (3h) 18s ago 2w 18.7M - 18.2.6 d4baa6a728fa eb3bc949f60b
crash.ceph-rgw-131 ceph-rgw-131 running (3h) 18s ago 2w 24.2M - 18.2.6 d4baa6a728fa 4d9f4f1636d0
crash.ceph-rgw-132 ceph-rgw-132 running (3h) 18s ago 12d 25.5M - 18.2.6 d4baa6a728fa 76631aa660b5
grafana.ceph-mon-121 ceph-mon-121 *:3000 running (3h) 19s ago 2w 163M - 9.4.7 954c08fa6188 8d137068c2fb
haproxy.nfs.my-nfs-ha.ceph-nfs-133.vtmecs ceph-nfs-133 *:2049,9001 running (3h) 8m ago 9d 4431k - 2.3.17-d1c9119 e85424b0d443 b30dd53fb9c3
haproxy.nfs.my-nfs-ha.ceph-nfs-134.ayjwby ceph-nfs-134 *:2049,9001 running (3h) 8m ago 9d 4440k - 2.3.17-d1c9119 e85424b0d443 d6d7f904f8e4
haproxy.nfs.my-nfs-ha.ceph-nfs-135.unpzje ceph-nfs-135 *:2049,9001 running (3h) 8m ago 9d 4123k - 2.3.17-d1c9119 e85424b0d443 ba7ad7cb47de
haproxy.rgw-ha.ceph-rgw-130.pbucrh ceph-rgw-130 *:8888,9002 running (3h) 18s ago 11d 12.5M - 2.3.17-d1c9119 e85424b0d443 0e48de12ae99
haproxy.rgw-ha.ceph-rgw-131.bpdxfo ceph-rgw-131 *:8888,9002 running (3h) 18s ago 11d 12.3M - 2.3.17-d1c9119 e85424b0d443 fe44bc498ded
haproxy.rgw-ha.ceph-rgw-132.qzesot ceph-rgw-132 *:8888,9002 running (3h) 18s ago 11d 12.2M - 2.3.17-d1c9119 e85424b0d443 b06a4ae8ee51
keepalived.nfs.my-nfs-ha.ceph-nfs-133.yjmqip ceph-nfs-133 running (3h) 8m ago 9d 15.0M - 2.2.4 4a3a1ff181d9 a93171069ddc
keepalived.nfs.my-nfs-ha.ceph-nfs-134.mmckol ceph-nfs-134 running (3h) 8m ago 9d 15.4M - 2.2.4 4a3a1ff181d9 424652a21e3f
keepalived.nfs.my-nfs-ha.ceph-nfs-135.dvrvkb ceph-nfs-135 running (3h) 8m ago 9d 15.3M - 2.2.4 4a3a1ff181d9 486600452fad
keepalived.rgw-ha.ceph-rgw-130.ievhiw ceph-rgw-130 running (3h) 18s ago 11d 15.1M - 2.2.4 4a3a1ff181d9 f92e00b209ba
keepalived.rgw-ha.ceph-rgw-131.wgqgsg ceph-rgw-131 running (3h) 18s ago 11d 15.2M - 2.2.4 4a3a1ff181d9 b17e96cc1193
keepalived.rgw-ha.ceph-rgw-132.gyawtm ceph-rgw-132 running (3h) 18s ago 11d 15.1M - 2.2.4 4a3a1ff181d9 5d16302b0cc6
mds.cephfs.ceph-mds-128.jgmcar ceph-mds-128 running (3h) 2m ago 2w 23.7M - 18.2.6 d4baa6a728fa 0a9d752caa74
mds.cephfs.ceph-osd-125.ciqjkf ceph-osd-125 running (3h) 2m ago 2w 43.1M - 18.2.6 d4baa6a728fa b91e282ca97a
mds.cephfs.ceph-osd-126.truoxe ceph-osd-126 running (3h) 2m ago 2w 24.3M - 18.2.6 d4baa6a728fa efb84557ed79
mds.kafka.ceph-mds-129.efahqw ceph-mds-129 running (3h) 20s ago 9d 28.7M - 18.2.6 d4baa6a728fa 6c062ec507fc
mds.kafka.ceph-rgw-131.vajyim ceph-rgw-131 running (3h) 18s ago 9d 25.0M - 18.2.6 d4baa6a728fa 8db722cbc1f9
mds.kubernetes.ceph-mds-128.eadcdz ceph-mds-128 running (3h) 2m ago 9d 29.0M - 18.2.6 d4baa6a728fa 6ee82238ccfe
mds.kubernetes.ceph-osd-124.sszgbp ceph-osd-124 running (3h) 2m ago 9d 24.1M - 18.2.6 d4baa6a728fa b98c981f5062
mds.nginx.ceph-mds-128.akqude ceph-mds-128 running (3h) 2m ago 9d 40.9M - 18.2.6 d4baa6a728fa 40c1fa5db5e2
mds.nginx.ceph-mon-122.skcdic ceph-mon-122 running (3h) 2m ago 9d 23.5M - 18.2.6 d4baa6a728fa 35559e398d06
mds.tomcat.ceph-mds-128.gzkutn ceph-mds-128 running (3h) 2m ago 9d 26.4M - 18.2.6 d4baa6a728fa 1a4f20a5e9ed
mds.tomcat.ceph-rgw-132.jonjxu ceph-rgw-132 running (3h) 18s ago 9d 25.7M - 18.2.6 d4baa6a728fa 74fb863d532d
mgr.ceph-mon-121.lecujw ceph-mon-121 *:8443,9283,8765 running (3h) 19s ago 2w 703M - 18.2.6 d4baa6a728fa 9c430c0f74c5
mgr.ceph-mon-122.fqawgp ceph-mon-122 *:8443,9283,8765 running (3h) 2m ago 2w 531M - 18.2.6 d4baa6a728fa b2c03a9af82d
mon.ceph-mon-121 ceph-mon-121 running (3h) 19s ago 2w 390M 2048M 18.2.6 d4baa6a728fa a61a5b3d5567
mon.ceph-mon-122 ceph-mon-122 running (3h) 2m ago 2w 355M 2048M 18.2.6 d4baa6a728fa a52b73f0f58c
mon.ceph-mon-123 ceph-mon-123 running (3h) 2m ago 2w 371M 2048M 18.2.6 d4baa6a728fa 2e5f7af4a659
nfs.my-nfs-ha.0.0.ceph-nfs-134.xjaltd ceph-nfs-134 *:2048 running (3h) 8m ago 3h 60.0M - 5.9 d4baa6a728fa 56f4ee1aa902
nfs.my-nfs-ha.1.0.ceph-nfs-135.bshlhd ceph-nfs-135 *:2048 running (3h) 8m ago 3h 62.8M - 5.9 d4baa6a728fa 6f343a6ed26b
nfs.my-nfs-ha.2.0.ceph-nfs-133.llwrlu ceph-nfs-133 *:2048 running (3h) 8m ago 3h 62.3M - 5.9 d4baa6a728fa 9c41e70ca22c
node-exporter.ceph-mds-127 ceph-mds-127 *:9100 running (3h) 2m ago 2w 19.2M - 1.5.0 0da6a335fe13 68ccc14b4a29
node-exporter.ceph-mds-128 ceph-mds-128 *:9100 running (3h) 2m ago 2w 19.0M - 1.5.0 0da6a335fe13 2c2f55e51af9
node-exporter.ceph-mds-129 ceph-mds-129 *:9100 running (3h) 20s ago 2w 18.5M - 1.5.0 0da6a335fe13 4cf23fc7c656
node-exporter.ceph-mon-121 ceph-mon-121 *:9100 running (3h) 19s ago 2w 19.2M - 1.5.0 0da6a335fe13 c78acb55a6db
node-exporter.ceph-mon-122 ceph-mon-122 *:9100 running (3h) 2m ago 2w 18.7M - 1.5.0 0da6a335fe13 425becb488de
node-exporter.ceph-mon-123 ceph-mon-123 *:9100 running (3h) 2m ago 2w 18.7M - 1.5.0 0da6a335fe13 301ec2b95fbe
node-exporter.ceph-nfs-133 ceph-nfs-133 *:9100 running (3h) 8m ago - 19.4M - 1.5.0 0da6a335fe13 a97d2b7ad324
node-exporter.ceph-nfs-134 ceph-nfs-134 *:9100 running (3h) 8m ago 9d 19.7M - 1.5.0 0da6a335fe13 555e180aee1c
node-exporter.ceph-nfs-135 ceph-nfs-135 *:9100 running (3h) 8m ago 9d 19.0M - 1.5.0 0da6a335fe13 219c808a469e
node-exporter.ceph-osd-124 ceph-osd-124 *:9100 running (3h) 2m ago 2w 18.7M - 1.5.0 0da6a335fe13 e9ede8d822c6
node-exporter.ceph-osd-125 ceph-osd-125 *:9100 running (3h) 2m ago 2w 18.6M - 1.5.0 0da6a335fe13 3eb6e638a4d6
node-exporter.ceph-osd-126 ceph-osd-126 *:9100 running (3h) 2m ago 2w 19.2M - 1.5.0 0da6a335fe13 8153f5cde637
node-exporter.ceph-rgw-130 ceph-rgw-130 *:9100 running (3h) 18s ago 2w 18.8M - 1.5.0 0da6a335fe13 cb6bf1143439
node-exporter.ceph-rgw-131 ceph-rgw-131 *:9100 running (3h) 18s ago 2w 18.8M - 1.5.0 0da6a335fe13 a80040cb4e8b
node-exporter.ceph-rgw-132 ceph-rgw-132 *:9100 running (3h) 18s ago 12d 18.6M - 1.5.0 0da6a335fe13 a46b365caaa8
osd.0 ceph-osd-124 running (3h) 2m ago 2w 390M 4096M 18.2.6 d4baa6a728fa 57669dd3f783
osd.1 ceph-osd-124 running (3h) 2m ago 2w 297M 4096M 18.2.6 d4baa6a728fa 148241bb00c6
osd.2 ceph-osd-125 running (3h) 2m ago 2w 330M 4096M 18.2.6 d4baa6a728fa 757be4e920c9
osd.3 ceph-osd-125 running (3h) 2m ago 2w 370M 4096M 18.2.6 d4baa6a728fa e9206eeb5339
osd.4 ceph-osd-126 running (3h) 2m ago 2w 347M 4096M 18.2.6 d4baa6a728fa 2efa3ea59281
osd.5 ceph-osd-126 running (3h) 2m ago 2w 315M 4096M 18.2.6 d4baa6a728fa 4f72394f115b
prometheus.ceph-mon-121 ceph-mon-121 *:9095 running (3h) 19s ago 2w 189M - 2.43.0 a07b618ecd1d 29d63a47a28b
rgw.movies-rgw-east.ceph-rgw-130.qwjmaa ceph-rgw-130 *:80 running (3h) 18s ago 12d 194M - 18.2.6 d4baa6a728fa 9401990e41d4
rgw.movies-rgw-east.ceph-rgw-131.uybrrq ceph-rgw-131 *:80 running (3h) 18s ago 12d 207M - 18.2.6 d4baa6a728fa fc07069f6a94
rgw.movies-rgw-west.ceph-rgw-132.rvxiaa ceph-rgw-132 *:80 running (3h) 18s ago 12d 208M - 18.2.6 d4baa6a728fa 984b04cdfe0b
```

5. Check Kubernetes Cluster

```
root@master:~/ceph# kubectl -n ceph get pod
NAME READY STATUS RESTARTS AGE
csi-rbdplugin-ktbfq 3/3 Running 0 148m
csi-rbdplugin-provisioner-6d767d5fb9-7xd4t 7/7 Running 0 148m
csi-rbdplugin-provisioner-6d767d5fb9-vz9xc 7/7 Running 0 148m
csi-rbdplugin-provisioner-6d767d5fb9-w79fq 7/7 Running 0 148m
csi-rbdplugin-rm6j9 3/3 Running 0 148m
csi-rbdplugin-sw8wq 3/3 Running 0 148m
nginx-statefulset-0 1/1 Running 0 119m
nginx-statefulset-1 1/1 Running 0 119m
nginx-statefulset-2 1/1 Running 0 120m
root@master:~/ceph# kubectl -n ceph get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
rbd-sc-pvc Bound pvc-5695d6fc-5386-4d43-8765-bb2d01da141f 10Gi RWO rbd-storage 148m
web-data-nginx-statefulset-0 Bound pvc-4ea3b5fe-a2ee-413d-af73-e8558f5d3589 10Gi RWO rbd-storage 134m
web-data-nginx-statefulset-1 Bound pvc-39962d28-da83-4ff4-a136-de7fcd266eed 10Gi RWO rbd-storage 134m
web-data-nginx-statefulset-2 Bound pvc-e845ed18-31af-4e07-bd85-04e374478ded 10Gi RWO rbd-storage 134m
```
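The StatefulSet's claims follow the pattern `<volumeClaimTemplate name>-<StatefulSet name>-<ordinal>`. That is why the listing shows web-data-nginx-statefulset-0 through -2 alongside the standalone rbd-sc-pvc. The sketch below prints the expected names for comparison; the helper is hypothetical.

```bash
# Hypothetical helper: expected PVC names for a StatefulSet volumeClaimTemplate.
# Arguments: template name, StatefulSet name, replica count.
sts_pvc_names() {
  local template=$1 sts=$2 replicas=$3 i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${template}-${sts}-${i}"
    i=$(( i + 1 ))
  done
}
```

Usage: `sts_pvc_names web-data nginx-statefulset 3 | xargs kubectl -n ceph get pvc` lists exactly the three StatefulSet claims.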

```
root@master:~/ceph# kubectl -n ceph get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
csi-metrics-rbdplugin ClusterIP 10.106.0.131 <none> 8080/TCP 148m
csi-rbdplugin-provisioner ClusterIP 10.107.246.145 <none> 8080/TCP 148m
rbd-nginx-nodeport NodePort 10.107.111.160 <none> 80:30060/TCP 119m
root@master:~/ceph# kubectl -n ceph get sc
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
local-path rancher.io/local-path Delete WaitForFirstConsumer false 2y166d
nfs-storage (default) nfs-provisioner Delete Immediate false 75d
rbd-storage rbd.csi.ceph.com Delete Immediate false 148m
root@master:~/ceph# ls -l
total 60
-rw-r--r-- 1 root root 287 May 6 05:01 ceph-config-map.yaml
-rw-r--r-- 1 root root 329 May 6 04:51 csi-configmap.yaml
-rw-r--r-- 1 root root 318 May 6 05:00 csi-kms-config-map.yaml
-rw-r--r-- 1 root root 1205 May 6 05:08 csi-nodeplugin-rbac.yaml
-rw-r--r-- 1 root root 4050 May 6 05:05 csi-provisioner-rbac.yaml
-rw-r--r-- 1 root root 9343 May 6 05:10 csi-rbdplugin-provisioner.yaml
-rw-r--r-- 1 root root 7731 May 6 05:11 csi-rbdplugin.yaml
-rw-r--r-- 1 root root 164 May 6 05:13 csi-rbd-secret.yaml
-rw-r--r-- 1 root root 264 May 6 05:55 nginx_service.yaml
-rw-r--r-- 1 root root 752 May 6 06:04 rbd-nginx-statefulset.yaml
-rw-r--r-- 1 root root 402 May 6 05:20 rbd-sc-pvc.yaml
-rw-r--r-- 1 root root 655 May 6 05:25 rbd-storageclass.yaml
```
